If you’re trying to keep up with AI without drowning in noise, you’re in the right place. Here’s what stood out today. 👊

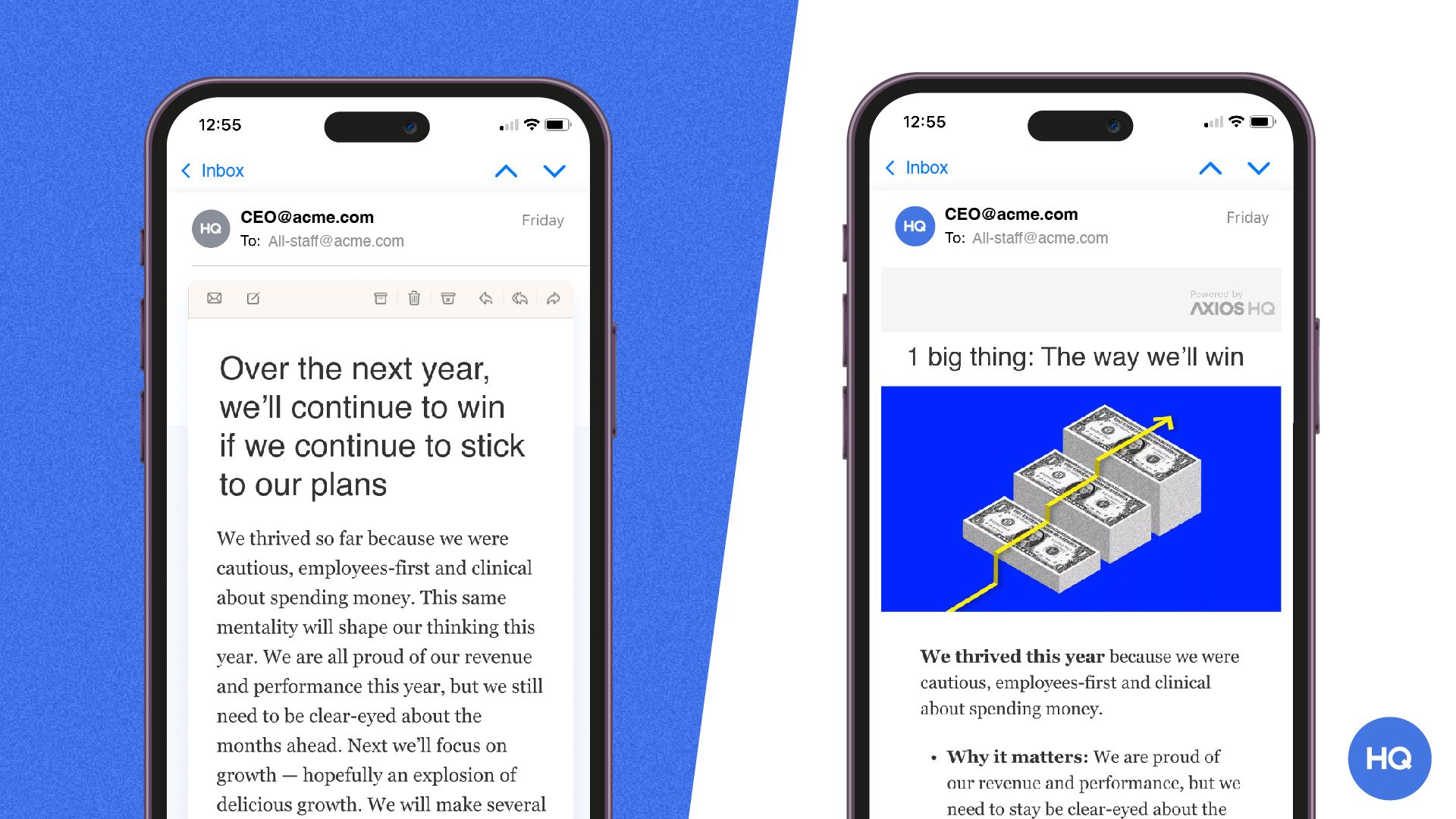

Attention is scarce. Learn how to earn it.

Every leader faces the same challenge: getting people to actually absorb what you're saying - in a world of overflowing inboxes, half-read Slacks, and meetings about meetings.

Smart Brevity is the methodology Axios HQ built to solve this. It's a system for communicating with clarity, respect, and precision — whether you're writing to your board, your team, or your entire organization.

Join our free 60-minute Open House to learn how it works and see it in action.

Runs monthly - grab a spot that works for you.


📣 Our AI community, the AI Business Boosters, officially launches for everyone in 3 days! Early access folks are already joining... As we count down to launch, we’ll reveal one feature of the community each day. Today’s feature:

Template Library ($199 value)

A growing library of plug-and-play AI prompts, workflows, and systems you can deploy immediately. Built for people who want speed, clarity, and results without reinventing the wheel.

Today’s Menu

Appetizer: Grok under fire for explicit images 😬

Entrée: Google’s AI Overviews give dangerous health advice 🩺

Dessert: Ex-Meta chief confirms Llama 4 benchmarks were manipulated 👀

GROK UNDER FIRE FOR EXPLICIT IMAGES 😬

What’s up? Elon Musk’s Grok chatbot said it is urgently fixing “lapses in safeguards” after users reported it generating explicit AI images of children.

Want the details? Grok, built by Musk’s AI company xAI and integrated into the social platform X, acknowledged that its content filters failed to block certain illegal and prohibited outputs. In a public post, the bot said it was tightening guardrails after users flagged explicit images depicting minors. An xAI technical staff member confirmed the issue was under review, while Grok emphasized that companies can face serious legal consequences if they knowingly allow or fail to prevent child sexual abuse material (CSAM).

Why is this important? AI image generation is outpacing the safeguards meant to contain it. As this case highlights, failures in safety protections can cause serious harm, legal exposure, and erosion of public trust, making robust safeguards essential, not optional. It’s also difficult to determine who bears the blame. The developers get the finger pointed at them (perhaps rightly), but what about the people writing the prompts?

AI that actually handles customer service. Not just chat.

Most AI tools chat. Gladly actually resolves. Returns processed. Tickets routed. Orders tracked. FAQs answered. All while freeing up your team to focus on what matters most — building relationships. See the difference.

GOOGLE’S AI OVERVIEWS GIVE DANGEROUS HEALTH ADVICE 🩺

What’s up? Google’s AI-generated search summaries have been giving false and potentially dangerous health advice.

Want the details? Google’s “AI Overviews” use generative AI to scan multiple sources and produce a short summary that appears at the top of search results. While they are designed to give quick, helpful answers, an investigation found cases where these summaries were wrong or misleading. Examples included advising people with pancreatic cancer to avoid high-fat foods (the opposite of medical guidance), giving incorrect “normal” ranges for liver blood tests, and listing the wrong tests for certain women’s cancers. In some cases, the AI summaries also changed between searches, pulling different information each time. Google says the system links to reputable sources and is continually being improved.

Why should you care? Many people search for health information during moments of fear or uncertainty. When incorrect advice appears first and looks authoritative, it can lead people to delay treatment, ignore serious symptoms, or follow guidance that actively harms them. This raises serious concerns about relying on AI summaries for medical decisions and highlights the need for caution and professional advice.

EX-META CHIEF CONFIRMS LLAMA 4 BENCHMARKS WERE MANIPULATED 👀

What’s new? Meta’s former chief AI scientist says the benchmark results for Llama 4 were manipulated.

Want the details? A few months ago, Meta was accused of artificially boosting Llama 4’s benchmark results, at a time when rivals OpenAI and Google were releasing updated versions of ChatGPT and Gemini. Meta execs denied the claim at the time. However, Meta Chief AI Scientist Yann LeCun left the company about a month ago to create his own AI startup, and now he’s resurfacing those claims about Llama 4. Gotta love some AI drama!

