A new week means a new batch of fresh and crispy AI news! 🗞

Today’s Menu

Appetizer: AI-generated product reviews are causing a stir 😖

Entrée: OpenAI to release ChatGPT app for Android 📱

Dessert: White House meets with AI leaders 🏛

🔨 AI TOOLS OF THE DAY

🏡 Staypia: An AI-powered hotel reservation system that offers the best deals on over 3.16M hotels worldwide. → check it out

🗣 Lingostar: A language-learning tool that offers real conversations to help learners reach fluency without the fear of making mistakes. → check it out

📊 One Connect Solutions: A platform that empowers businesses to make data-driven decisions efficiently. → check it out

AI-GENERATED PRODUCT REVIEWS ARE CAUSING A STIR 😖

Product reviews not only help people find the best product; they can also be quite entertaining! 🤣

What’s happening? Generative AI is beginning to churn out human-sounding product reviews in bulk. In response, AI models are being trained to detect AI-generated reviews, setting off an AI war of sorts.

Why is this important? This battle has implications for consumers and for the future of online reviews. 90% of consumers say they read reviews while shopping online, so reviews play a critical role in how products sell. Some “fake review farms” have even formed, selling fraudulent reviews in bulk that can promote a company’s products or hurt its competitors. Teresa Murray, who directs the consumer watchdog office for the U.S. Public Interest Research Group, said, “Already, AI is helping dishonest businesses spit out real-sounding reviews with a conversational tone by the thousands in a matter of seconds.”

Can this problem be solved? Ben Zhao, a professor of computer science at the University of Chicago, said it’s “almost impossible” for AI to reliably weed out AI-generated reviews, because bot-written reviews are often indistinguishable from human ones. As AI keeps improving and learning from human input and data, detection will only get harder. If that trend holds, other solutions may be needed to address these concerns. 😐

OPENAI TO RELEASE CHATGPT APP FOR ANDROID 📱

ChatGPT is on the move! Literally … it’s going mobile … again.

What’s new? OpenAI announced that it will release its well-known AI chatbot, ChatGPT, for Android users this week. The launch follows the app’s debut on iOS in May.

What does this mean? The release highlights OpenAI’s commitment to making ChatGPT more accessible to the public. The app will have improved security measures compared with the web version, which should better protect user privacy. Like the iOS version, it will also sync conversation history across devices. 🦾

WHITE HOUSE MEETS WITH AI LEADERS 🏛

From left: Amazon Web Services CEO Adam Selipsky, OpenAI President Greg Brockman, Meta President of Global Affairs Nick Clegg, Inflection AI CEO Mustafa Suleyman, Anthropic CEO Dario Amodei, Google President of Global Affairs Kent Walker, and Microsoft Vice Chair and President Brad Smith (Photo: ABC)

With the U.S. government lagging behind on regulation, AI companies are coming together in a collective effort to make the AI landscape safer and more sustainable. 🙏

What happened? On Friday, President Joe Biden met with prominent tech leaders at the White House. The meeting was meant to highlight the companies’ recent efforts to improve the safety and transparency of emerging AI technology.

What came of it? During the meeting, seven major AI companies (Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI) jointly and voluntarily committed to several initiatives:

They pledged to allow external security testing of their AI products, so that independent experts can rigorously scrutinize these technologies and help identify and address potential vulnerabilities.

They committed to sharing information with governments, civil society, and academia to help manage the risks of AI. Through collaboration and transparency, they aim to jointly address challenges that may arise from the widespread use of AI.

They agreed to develop a digital watermarking system that flags content generated by their AI products. The measure is meant to promote accountability and traceability, making it easy for users to identify AI-generated content.

These voluntary initiatives were described by the White House as "pushing the envelope on what companies are doing and raising the standards for safety, security and trust of AI."

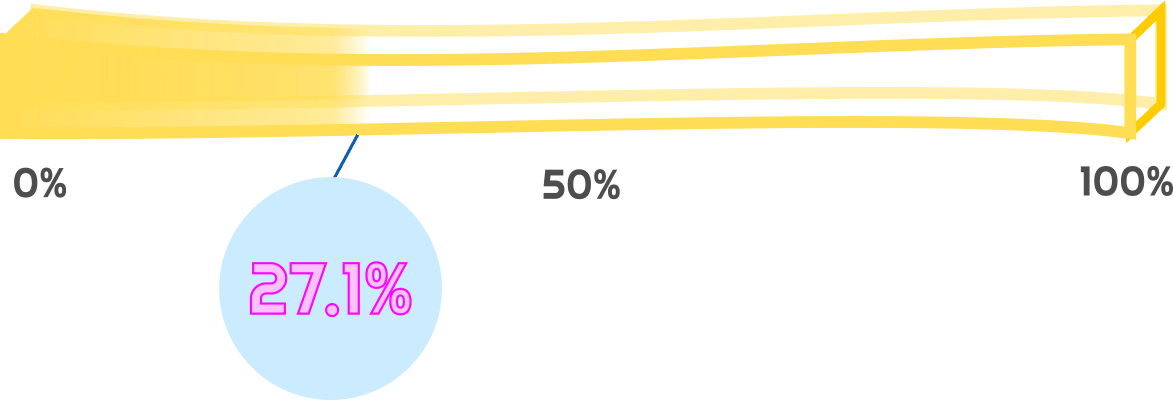

HAS AI REACHED THE SINGULARITY? CHECK OUT THE FRY METER BELOW

For the first time ever, the Fry Meter is above 27%! This is largely due to the leak of AppleGPT.