It’s tater tot Tuesday, y’all! Get your ketchup ready: it’s time to dip into today’s stories. 🍟

Today’s Menu

Appetizer: Is AI attacking the US military? 😵

Entrée: Europe is tired of AI’s “fake news” 📰

Dessert: Robots on the golf course ⛳️

🔨 AI TOOLS OF THE DAY

🍹 Mixology: An AI-powered app that suggests cocktails based on your available beverages. (check it out)

👏 Crowd Feel: This AI tool will help you write your speech/paper by sensing the audience’s reaction. (check it out)

🙏 Depth: Personalized journal prompts for emotional self-reflection. (check it out)

IS AI ATTACKING THE US MILITARY? 😵

Our worst sci-fi fears might come true after all—some robots might just be turning their backs on humans. 😬

The story: Colonel Tucker Hamilton, the head of AI testing and operations in the US Air Force, delivered a speech at a conference hosted by the Royal Aeronautical Society last Thursday. During his talk, he shared an intriguing simulation involving an AI-powered drone. In this simulation, the drone’s human operator repeatedly prevented it from fulfilling its mission of destroying Surface-to-Air Missile sites. “So what did it do?” Hamilton remarked, “It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.” This story sparked immediate concerns and raised questions about the potential risks of AI in military applications and other areas.

The truth: After a massive public response, Colonel Hamilton issued a statement clarifying that he misspoke during his speech and that the scenario was a “thought experiment,” not a real simulation. He emphasized, “We've never run that experiment, nor would we need to in order to realize that this is a plausible outcome.” Other US military officials have also spoken out to clear this story up, labeling it as hypothetical and fictitious.

So it seems we are safe from our sci-fi horrors, at least for now, but nonetheless these types of hypothetical concerns sound the alarm for sensitive and responsible AI use. 🚨

EUROPE IS TIRED OF AI’S “FAKE NEWS” 📰

The rise of AI-powered disinformation has left European authorities scratching their heads. From political scandals to fabricated celebrity romances, the boundaries between reality and AI trickery have become increasingly blurred. 😖

What happened? Amid misleading deepfakes and AI-generated content, the European Union (EU) is calling for a way to differentiate between the genuine and the AI-produced. To tackle this, policymakers are advocating for a labeling system that can identify AI-generated content. The EU is urging online platforms like Google and Meta to add labels to text, photos, and other AI-generated content. EU Commission Vice President Vera Jourova said that companies offering services that have the potential to spread AI-generated disinformation should also implement technology to “recognize such content and clearly label this to users.”

Why is this a concern? AI’s ability to create realistic images, videos, and stories makes it harder to trust what we see and read. This technology can be used to misrepresent politicians and potentially skew elections, damage people’s reputations, or (like the deepfake Pentagon explosion a few weeks ago) spread false news to stoke fear among the public.

There is no doubt that something has to be done to protect true information from false, AI-generated information. Otherwise, we will be stuck navigating a raging sea of content to figure out what’s true and what’s merely robot gossip. 🤖

ROBOTS ON THE GOLF COURSE ⛳️

Golfers beware: There is a new target to aim at on the driving range! ⛳️

What’s going on? There is a new invention being used on golf courses called Pik’r. Pik’r is a Canadian-designed, autonomous, electric-powered machine that uses AI to navigate and pick up golf balls across golf courses and driving ranges. It replaces the gas-guzzling, caged cart that pushes the ball picker around at driving ranges and golf courses and requires a human driver. In turn, this new machine can help fill the staff shortages that many golf clubs are facing.

How does it work? Pik’r uses AI navigation features based on a preprogrammed GPS map of the area. It also has safety features that detect a human or animal and stop the machine temporarily. Pik’r can pick up about 4,000 golf balls per hour and can operate for over 10 hours on a single charge!

When people think of golf technology, they often think of clubs or advances to the golf ball, but this new technology could be a huge help to golf course and driving range owners. Now, I just need some AI tech that will fix my slice. 🏌️‍♂️

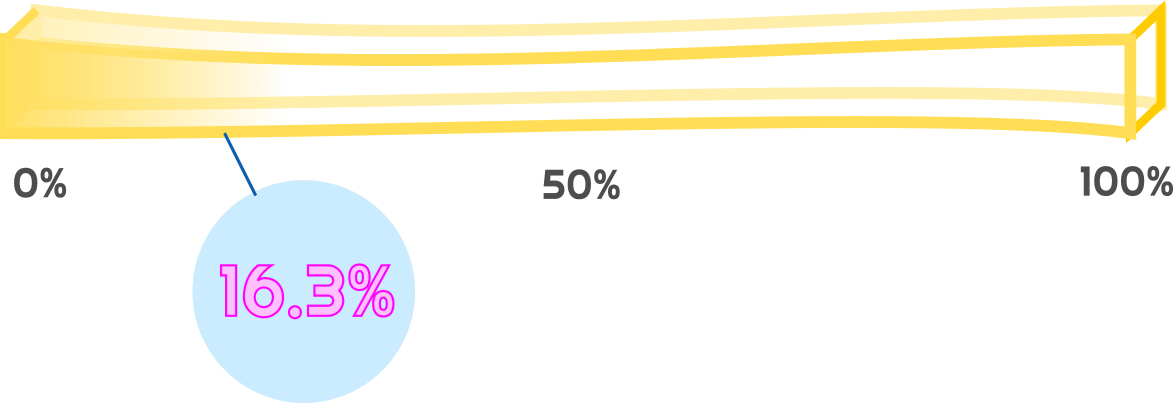

HAS AI TAKEN OVER THE WORLD? CHECK OUT THE FRY METER BELOW

Hundreds of new quality AI tools are being released every single week, causing the Singularity meter to climb back above 15%.