Welcome to this week’s Deep-fried dive with Fry Guy! In these long-form articles, Fry Guy conducts an in-depth analysis of a cutting-edge AI development. Today, our dive is about the AI Chef. We hope you enjoy! 🙂

Get your plates ready, because researchers from the University of Cambridge have programmed an AI-powered, robotic chef that might just give Guy Fieri a run for his money!

Robotic chefs are nothing new to the tech industry, as factories and restaurants have used programmed robots to mass-produce food for years. However, this new robot has something different about it: it possesses a mind of its own! Unlike the conventionally programmed machines often used in cooking, the Cambridge robot uses AI algorithms to learn from cooking videos and come up with its own, improved recipes.

HOW DOES IT WORK?

Grzegorz Sochacki, a PhD candidate in Professor Fumiya Iida’s Bio-Inspired Robotics Laboratory, and his colleagues devised eight simple salad recipes and filmed themselves making them. They then presented these videos to their AI-powered bot. After watching each video, the AI Chef was able to identify which recipe was being prepared and replicate the salad on its own. Sochacki remarked, "We wanted to see whether we could train a robot chef to learn in the same incremental way that humans can, by identifying the ingredients and how they go together in the dish."

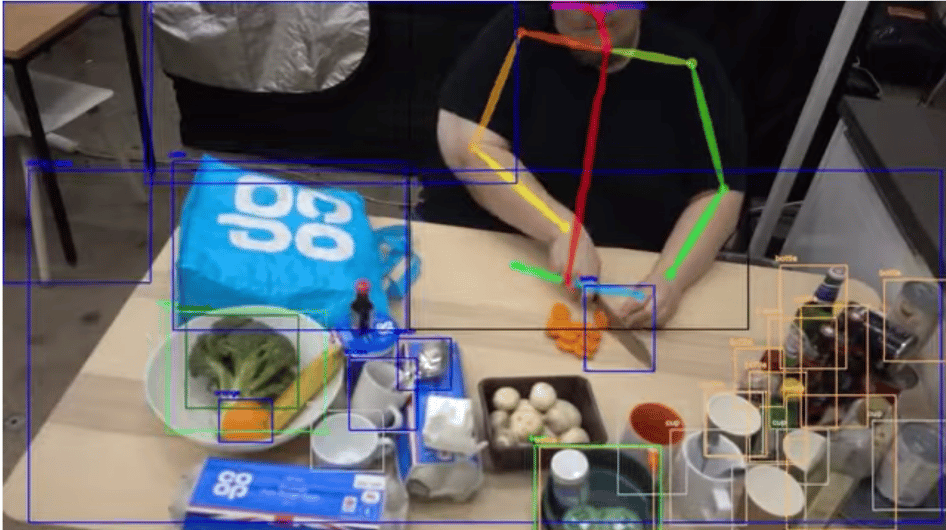

The researchers began by programming the AI Chef to recognize common items like a knife and the ingredients, such as lettuce, tomatoes, peppers, broccoli, and carrots. The AI Chef was also programmed to recognize the human demonstrator's arms, hands, fingers, and face. Using these computer vision techniques, the robot analyzed each frame of the researchers' videos with two neural networks: YOLO (You Only Look Once), an object-detection model, and OpenPose, a human pose-estimation model. Correlations between the objects and the demonstrator's movements were computed from the paths these networks extracted. The recipes and videos were then transformed into vectors, and the robot carried out mathematical operations on them to measure the similarity between a demonstration and each recipe.
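
To make the "vectors and similarity" idea concrete, here is a minimal sketch in Python. It assumes each recipe is a vector of ingredient counts and uses cosine similarity as the comparison; the article does not say which similarity measure the researchers used, so treat that choice, the ingredient order, and the two-recipe cookbook as illustrative assumptions.

```python
import math

# Hypothetical fixed ingredient order for the vectors (an assumption for illustration).
INGREDIENTS = ["lettuce", "tomato", "pepper", "broccoli", "carrot"]


def to_vector(counts):
    """Turn a dict of ingredient counts into a fixed-order list of numbers."""
    return [float(counts.get(name, 0)) for name in INGREDIENTS]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def closest_recipe(demo_counts, cookbook):
    """Return (recipe_name, similarity) for the best-matching known recipe."""
    demo = to_vector(demo_counts)
    scored = [
        (name, cosine_similarity(demo, to_vector(recipe)))
        for name, recipe in cookbook.items()
    ]
    return max(scored, key=lambda pair: pair[1])


# Made-up cookbook, not the researchers' actual recipes.
COOKBOOK = {
    "salad_a": {"lettuce": 1, "tomato": 2},
    "salad_b": {"broccoli": 1, "carrot": 2},
}

# A demonstration with a double portion of broccoli still lands on salad_b.
best_name, best_score = closest_recipe({"broccoli": 2, "carrot": 2}, COOKBOOK)
```

One reason cosine similarity is a convenient stand-in here is that it compares proportions rather than absolute amounts, so scaling every ingredient by the same factor leaves the score unchanged.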

By accurately recognizing the components involved in the video and observing the actions of the human chef, the robot was able to deduce the specific recipe being prepared. For instance, if the human chef held a knife in one hand and a carrot in the other while the knife hand was moving the blade down through the carrot, the robot would infer that the carrot was being sliced or chopped.
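
The knife-and-carrot reasoning above can be sketched as a simple rule over per-frame observations. Everything in this example is hypothetical: the `Frame` structure, the `min_drop` threshold, and the convention that a larger `knife_y` means lower in the image are assumptions made for illustration, not details from the study.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """Simplified per-frame observation (hypothetical structure)."""
    left_hand: str   # label of the object held in the left hand, or ""
    right_hand: str  # label of the object held in the right hand, or ""
    knife_y: float   # vertical knife position; larger means lower in the image


def infer_chopped(frames, min_drop=10.0):
    """List ingredients held in one hand while a knife in the other moves down."""
    chopped = []
    for prev, cur in zip(frames, frames[1:]):
        held = {cur.left_hand, cur.right_hand}
        if "knife" not in held:
            continue
        others = held - {"knife", ""}
        moving_down = cur.knife_y - prev.knife_y >= min_drop
        if others and moving_down:
            ingredient = others.pop()
            if ingredient not in chopped:
                chopped.append(ingredient)
    return chopped


# Toy sequence: the knife drops through a carrot, then a tomato is picked up uncut.
demo_frames = [
    Frame("carrot", "knife", 100.0),
    Frame("carrot", "knife", 130.0),
    Frame("", "", 130.0),
    Frame("tomato", "", 130.0),
]
chopped_items = infer_chopped(demo_frames)
```

In this toy sequence only the carrot counts as chopped, because the tomato never appears alongside a moving knife.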

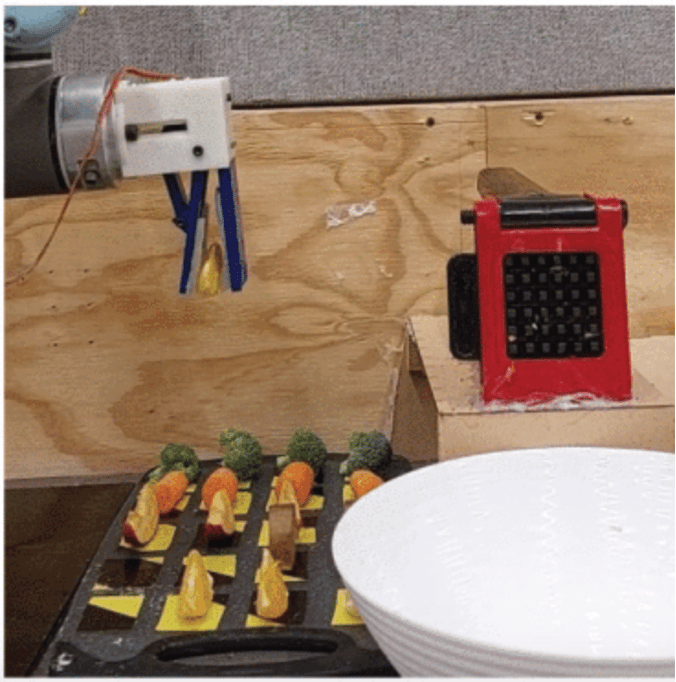

The ingredients were placed in predefined positions in front of the AI Chef’s UR5 robotic arm. This arm was programmed using Python, which allowed for the integration of robotic arm control and computer vision. The researchers began by programming the robot's basic movements, such as moving to the home position, opening and closing the gripper, and moving on leverage points at a basic speed. These basic movements were then combined into more complex ones and packaged as higher-level functions. The researchers reported, "An example function of this kind consists of opening the cutter, picking up a piece of carrot, moving it to the home position, then dropping it inside the fry cutter and cutting it."
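
Here is a rough sketch of how basic movements might be layered into a higher-level function like the one the researchers describe. `MockArm` is a hypothetical stand-in that only records commands; it is not the UR5's real control interface, and the method and position names are invented for illustration.

```python
class MockArm:
    """Stand-in for a robot arm driver; it only records commands (no hardware)."""

    def __init__(self):
        self.log = []

    def move_to(self, position):
        self.log.append(f"move_to:{position}")

    def open_gripper(self):
        self.log.append("open_gripper")

    def close_gripper(self):
        self.log.append("close_gripper")

    def open_cutter(self):
        self.log.append("open_cutter")

    def close_cutter(self):
        self.log.append("close_cutter")


def pick_up(arm, position):
    """Basic building block: open the gripper, move to the item, and grasp it."""
    arm.open_gripper()
    arm.move_to(position)
    arm.close_gripper()


def cut_ingredient(arm, ingredient_position):
    """Composite function following the sequence quoted in the article."""
    arm.open_cutter()                  # open the cutter
    pick_up(arm, ingredient_position)  # pick up the piece of carrot
    arm.move_to("home")                # move it to the home position
    arm.move_to("cutter")              # carry it over the cutter
    arm.open_gripper()                 # drop it inside
    arm.close_cutter()                 # cut it


arm = MockArm()
cut_ingredient(arm, "carrot_slot")
```

Keeping the primitives small means a new task is mostly a matter of arranging existing steps in a different order, which fits the predefined ingredient positions described above.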

The Cambridge researchers showed the AI Chef 16 videos, in which each of the eight recipes appeared twice, with the order of ingredients in one version the reverse of the other. Out of these 16 trials, the AI-powered bot recognized the correct recipe 15 times (93.75% accuracy). The AI Chef was also able to detect variations in the recipe, such as a double portion of broccoli, and was able to differentiate these variations from another recipe entirely. In this way, the robot allowed for human error and recognized how the ingredients in a dish go together. Sochacki remarked, "It's amazing how much nuance the robot was able to detect. These recipes aren't complex; they're essentially chopped fruits and vegetables, but it was really effective at recognizing, for example, that two chopped apples and two chopped carrots is the same recipe as three chopped apples and three chopped carrots." Because of this machine learning ability, the AI Chef was ultimately able to come up with its own, original ninth recipe. This paves the way for future, AI-originated creations.
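
One way to picture the difference between a variation of a known recipe and a different recipe entirely is a similarity threshold. The sketch below is an assumption-heavy illustration: the cosine measure, the `threshold` of 0.9, and the `recipe_N` naming are all hypothetical, and the article does not describe how the researchers actually drew this line.

```python
import math


def cosine(a, b):
    """Cosine similarity between two ingredient-count dicts."""
    keys = set(a) | set(b)
    dot = sum(a.get(k, 0) * b.get(k, 0) for k in keys)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def classify_or_learn(demo, cookbook, threshold=0.9):
    """Match a demo to a known recipe, or store it as new if nothing is close."""
    best_name, best_score = None, 0.0
    for name, recipe in cookbook.items():
        score = cosine(demo, recipe)
        if score > best_score:
            best_name, best_score = name, score
    if best_name is not None and best_score >= threshold:
        return best_name
    new_name = f"recipe_{len(cookbook) + 1}"
    cookbook[new_name] = dict(demo)  # remember the unfamiliar demonstration
    return new_name


# Made-up cookbook with a single known recipe.
cookbook = {"recipe_1": {"apple": 2, "carrot": 2}}

# Three apples and three carrots keep the same proportions, so they match recipe_1.
scaled_match = classify_or_learn({"apple": 3, "carrot": 3}, cookbook)

# A demonstration with no ingredients in common is stored as a new entry.
unfamiliar = classify_or_learn({"broccoli": 1, "tomato": 1}, cookbook)
```

Under these assumptions, Sochacki's apples-and-carrots example falls out naturally: scaling every portion equally leaves the proportions, and therefore the match, unchanged.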

HOW CAN THE AI CHEF IMPROVE FOR THE FUTURE?

The AI Chef has the potential to learn from a variety of videos and create customizable recipes that could revolutionize the food industry, but further development is needed first. This starts with the detection algorithms and the videos used by the AI Chef.

The study conducted by these Cambridge researchers was not error-free. Errors occurred during the detection and assembly of ingredients in the salad-making process. For each recipe variant, the researchers compared the number of ingredients the system extracted with the number actually added. They found that in some cases, the extracted number was incorrect. These errors included both double detections of an ingredient and missed ingredients. In one demonstration, a key ingredient was not detected, resulting in an incorrect recipe classification. The miss mattered because this recipe had only two ingredients, so failing to detect one of them was a relatively large error.

Despite these errors, the system was able to correctly recognize all ingredients in 83% of demonstrations and, as previously stated, accurately predict the intended recipe of the human cook in 93.75% of cases (15 of 16).
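
The bookkeeping behind numbers like these can be sketched as follows. The demonstrations below are invented toy data, not the study's results, and the function names are hypothetical; the sketch only shows how double detections and missed ingredients could be separated and tallied.

```python
def detection_errors(actual, detected):
    """Compare true and detected ingredient counts for one demonstration.

    Returns (extra, missed): ingredients counted too often and too rarely.
    """
    extra, missed = {}, {}
    for name in set(actual) | set(detected):
        diff = detected.get(name, 0) - actual.get(name, 0)
        if diff > 0:
            extra[name] = diff
        elif diff < 0:
            missed[name] = -diff
    return extra, missed


def fraction_fully_correct(demos):
    """Share of demonstrations with no extra and no missed ingredients."""
    clean = sum(
        1
        for actual, detected in demos
        if detection_errors(actual, detected) == ({}, {})
    )
    return clean / len(demos)


# Invented toy data: (actual counts, detected counts) for each demonstration.
toy_demos = [
    ({"apple": 1, "carrot": 1}, {"apple": 1, "carrot": 1}),  # fully correct
    ({"apple": 1, "carrot": 1}, {"apple": 1}),               # carrot missed
    ({"tomato": 2}, {"tomato": 3}),                          # double detection
    ({"lettuce": 1}, {"lettuce": 1}),                        # fully correct
]
toy_share = fraction_fully_correct(toy_demos)

# The recipe-level figure quoted in the article is simple arithmetic.
recipe_accuracy = 15 / 16
```

The second toy demonstration mirrors the two-ingredient case described earlier: missing one of two ingredients removes half of the evidence for that recipe.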

Another obstacle to this technology’s ability to correctly detect ingredients and track recipes is the type of video being used. When people think about cooking shows or videos that go viral on social media, they often think of videos with fast cuts and a lot of visual effects. These popular videos tend to cut quickly back and forth between the food and the cook, which could throw off the AI Chef as it tries to pinpoint sequential actions. As Sochacki said, "Our robot isn't interested in the sorts of food videos that go viral on social media; they're simply too hard to follow."

It is also difficult for the AI Chef to identify objects that are partly hidden. For example, if a human cook is covering an ingredient with their hand, the AI Chef, in its current programming, could struggle to identify it. In the videos made by the researchers, the demonstrator had to hold up the ingredient towards the camera or make it almost entirely visible for the bot to identify it. The developers plan to overcome these obstacles by training the recognition models on a wider data set that shows ingredients and objects from different angles and in different lighting. Sochacki believes these further developments will allow for a wider range of learning and recipe building. He stated, "As these robot chefs get better and faster at identifying ingredients in food videos, they might be able to use sites like YouTube to learn a whole range of recipes."

The Cambridge researchers hope that their report, published in IEEE Access, will facilitate more convenient and cost-effective implementation of robotic, AI-powered chefs. The study's findings also showcase the potential of machine learning to utilize visual observation, which opens the floodgates for further AI developments in a plethora of fields.