Happy Tuesday! The week is heating up, so let’s add some sizzling AI insights to keep the momentum going. 🔥

🤯 MYSTERY AI LINK 🤯

(The mystery link can lead to ANYTHING AI-related: tools, memes, articles, videos, and more…)

Today’s Menu

Appetizer: Meta collects data from everything you see 🤓

Entrée: AI comes to Gmail for iOS 📧

Dessert: AI sees what radiologists can’t 🩻

🔨 AI TOOLS OF THE DAY

👍 Layerpath: Create interactive product demos in minutes.

🤝 Odoo: An all-in-one business platform.

💻 Lumona: A search engine powered by social media.

META COLLECTS DATA FROM EVERYTHING YOU SEE 🤓

Q: What did the boy robot say to the girl robot he had a crush on?

A: He asked if he could data, of course. 💘

What’s going on? Recent reports have revealed that any image, video, or voice recording shared with Meta AI through Meta’s Ray-Ban smart glasses may be used to train the company’s AI models.

“[I]n locations where multimodal AI is available (currently U.S. and Canada), images and videos shared with Meta AI may be used to improve it per our Privacy Policy.”

How does this work? Meta’s Ray-Ban smart glasses offer a variety of AI-driven features. Users can look at their surroundings and ask the glasses questions, such as which dish on a menu is the healthiest or what the history of the building in front of them is. When the glasses are “prompted” in this way, the image, video, and voice data are sent to Meta for analysis. Meta has clarified that photos and videos captured with the glasses aren’t used for AI training unless the user submits them to Meta AI for analysis, so the company isn’t taking in everything users look at, only what they ask about. Once submitted, however, these media files fall under a different set of policies, which allow Meta to use them for AI development. The only way to “opt out” is to not use the glasses’ AI features in the first place.

Why is this concerning? Users might unintentionally send personal images, such as shots of the inside of their homes or of loved ones, into Meta’s data stockpile. As new features roll out, including live video analysis, the risk of sharing sensitive data grows. Meta points to its privacy policies for transparency, but consumers may not fully understand what they are agreeing to when they use the AI features.

AI COMES TO GMAIL FOR IOS 📧

I hope this story finds you well … ⌨️

What’s going on? Google is rolling out Gmail Q&A for iOS. Powered by the Gemini AI assistant, this feature helps users manage their inbox more efficiently.

Want the details? The feature, which launched on the web and Android last month, lets users ask Gemini to dig through their Gmail or Google Drive, for example to retrieve lost contact information, summarize emails, or find company data. Users can also surface unread messages, locate emails from specific senders, or get answers to general Google Search queries without leaving their inbox. The goal is to quickly pull relevant information out of a crowded inbox or linked Google Drive.

When will this be available? Gmail Q&A is currently limited to Google One AI Premium subscribers and Google Workspace accounts with specific add-ons. The rollout is underway, but it may take a couple of weeks for all eligible users to see the feature on iOS.

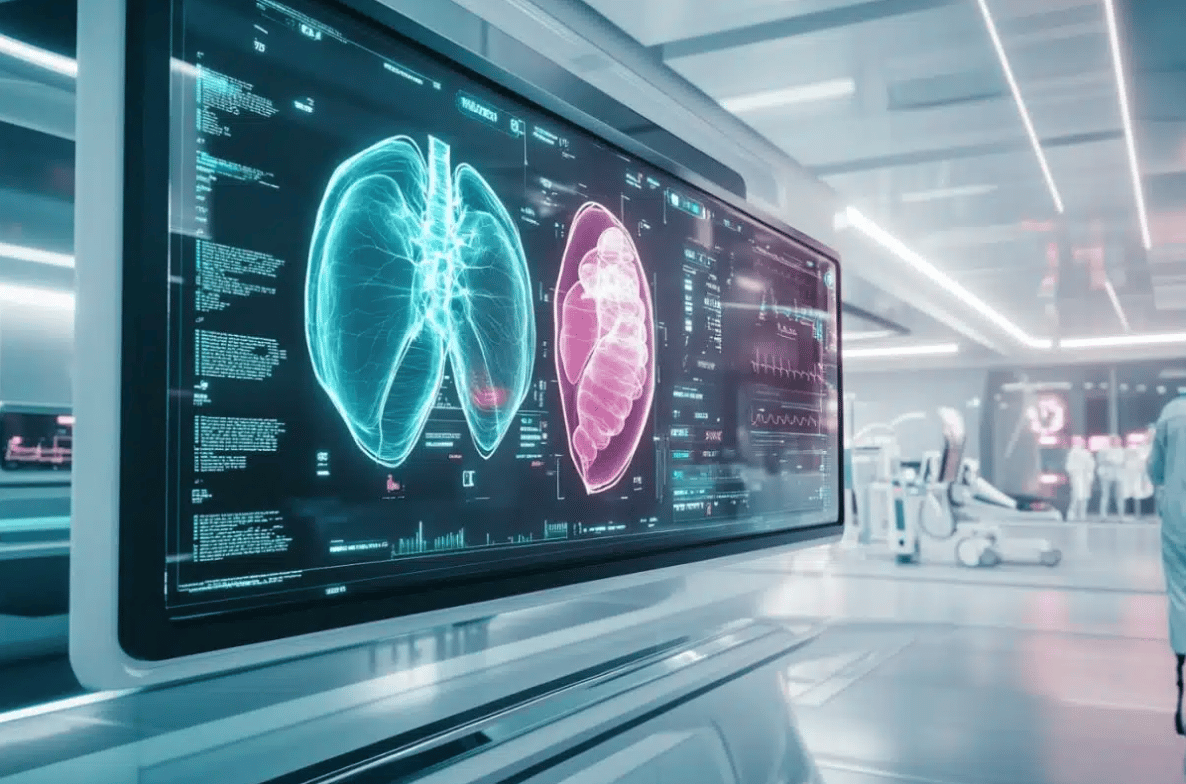

AI SEES WHAT RADIOLOGISTS CAN’T 🩻

I tried to lie to my radiologist, but she saw right through me. 👁️

What’s happening? Penn AInSights, an AI-based imaging system developed at the University of Pennsylvania, creates detailed 3D views of internal organs.

How does it work? Penn AInSights was trained on a vast dataset of “normal” organs and now analyzes more than 2,000 scans each month, helping clinicians detect conditions like fatty liver disease and diabetes early. The system can catch subtle organ abnormalities that are imperceptible to the human eye. This enables “opportunistic screening”: flagging potential health risks even when a scan was ordered for a different reason. Penn AInSights also fits into existing clinical workflows, returning results in just 2.8 minutes per scan.