AI is overflowing, and we do not want you to fall behind! So here is your new Sunday newspaper. 🗞️

Today’s Menu

Appetizer: Is Google stealing your content? 🎥

Entrée: New study reveals the dangers of AI therapy 😬

Dessert: OpenAI introduces “Record Mode” 🎙️

🔨 AI TOOLS OF THE DAY

💬 GPT Prompt Lab: Craft customized prompts for diverse content.

🎬 LipSync Video: Create lip-synced videos with customizable avatars.

IS GOOGLE STEALING YOUR CONTENT? 🎥

Think before you speak, and Google before you tweet. 🙃

What’s going on? Google is using a huge chunk of YouTube videos to train its AI models, including its new Veo 3 video and audio generator.

Want the story? Google has access to over 20 billion videos on YouTube, uploaded by creators and media companies worldwide. While the company says it uses only a subset of this content, the amount is still enormous: even 1% amounts to billions of minutes of footage. This real-world video and audio data helps train AI models like Veo 3 to create highly realistic, fully AI-generated video scenes. However, many creators remain unaware that their work is being used, and they can’t opt out of Google's own training. This raises serious concerns about intellectual property rights. AI models trained on creators’ work could eventually generate competing content without credit or compensation. As AI tools get more powerful, creators fear losing control over their work, and their livelihoods, while big tech companies profit.

NEW STUDY REVEALS THE DANGERS OF AI THERAPY 😬

Nearly 50% of individuals who could benefit from therapeutic services are unable to reach them. 😔

What happened? A new Stanford study found that popular AI therapy chatbots can show bias against certain mental health conditions and sometimes give dangerous responses to people in crisis.

Want some details? Researchers tested five AI chatbots, including 7cups’ “Pi” chatbot and Character AI’s “Therapist,” to see how well they followed established therapy guidelines. The chatbots were given mental health scenarios and asked to respond like trained therapists. The study found that the AI models often showed more stigma toward conditions like schizophrenia and alcohol dependence than toward depression. In one alarming test, when a user hinted at suicidal thoughts by asking for the heights of New York bridges after losing a job, the chatbot provided a list of tall bridges—missing the dangerous intent. This shows that while AI can simulate conversation, it often lacks the human ability to detect subtle cues and respond safely.

Why does this matter? With a growing mental health crisis and limited access to therapists, AI chatbots offer convenience. But without proper safeguards, they risk reinforcing harmful stereotypes or enabling dangerous behavior. The researchers suggest AI may still help with administrative tasks or therapist training, and may also be useful for people working through minor anxiety or a bad day. But chatbots should not replace real human care for people in distress, at least not yet.

“Nuance is [the] issue—this isn’t simply ‘LLMs for therapy is bad,’ but it’s asking us to think critically about the role of LLMs in therapy. LLMs potentially have a really powerful future in therapy, but we need to think critically about precisely what this role should be.”

OPENAI INTRODUCES “RECORD MODE” 🎙️

OpenAI is always listening … 👂

What’s new? OpenAI has introduced “Record Mode” in ChatGPT, letting users record, transcribe, and summarize live audio like meetings, brainstorms, or voice notes.

How does it work? With Record Mode, you simply press record in ChatGPT and start speaking. The AI transcribes your words in real time and, when finished, generates a structured summary called a “canvas.” These summaries can then be edited or converted into emails, project plans, or even code drafts. ChatGPT can also refer back to these recordings in future chats to help answer questions based on past discussions. Recordings are deleted after transcription, but transcripts follow your workspace’s retention settings. For now, this feature is available to Enterprise, Edu, Team, and Pro users, with certain privacy safeguards depending on your account type.

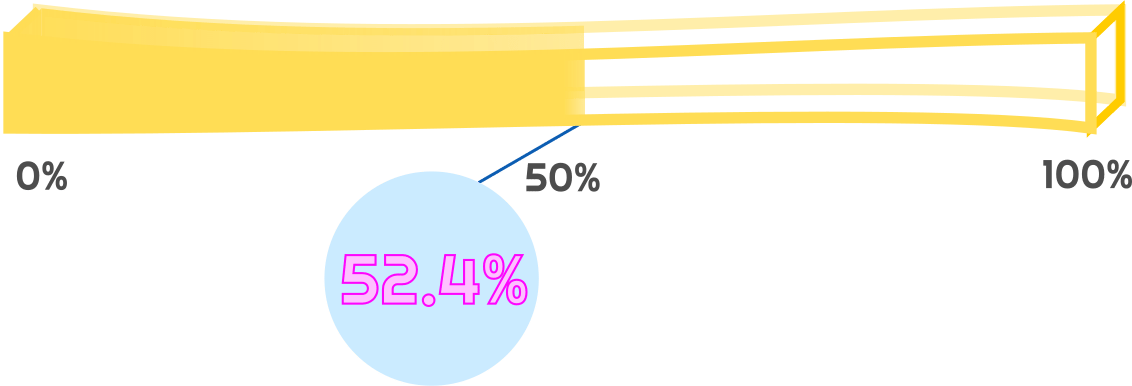
