Good morning, and happy FRY-day! We are cooking up some tasty treats, just for you. 😋

🤯 MYSTERY AI LINK 🤯

(The mystery link can lead to ANYTHING AI related. Tools, memes, articles, videos, and more…)

Today’s Menu

Appetizer: Google to charge for AI search features 💰

Entrée: Stability AI launches Stable Audio 2.0 🎵

Dessert: Is this “AI-assisted genocide” or a helpful tool? 💣

🔨 AI TOOLS OF THE DAY

🤖 Futureproof: This AI attempts to predict the future of your job or role in an AI world and gives you advice on how you can be better prepared for it. → check it out

🙏 DearGod: Write letters to God and explore the Bible, with the guidance of AI. → check it out

🤣 LlamaGen: Turn text into stunning and engaging comic images and strips. → check it out

GOOGLE TO CHARGE FOR AI SEARCH FEATURES 💰

Q: Why is dough another word for money?

A: Because everyone kneads it. 🍞

What’s new? Google is considering introducing charges for “premium content” generated by AI via its SGE (Search Generative Experience). This would mark the first time ever that Google has charged for search features.

Want the details? Although Google has not officially confirmed these plans, reports suggest that the company is exploring the idea of placing certain AI-powered search features behind a paywall, while traditional Google search features will remain free. The purpose of doing so is to leverage AI capabilities within its search engine while also protecting revenue streams. Because AI features remain expensive to run on the backend, it is becoming increasingly crucial that the companies that power these systems find ways to remain financially sustainable while keeping costs practical for customers.

STABILITY AI LAUNCHES STABLE AUDIO 2.0 🎵

Q: What type of music are balloons afraid of?

A: Pop. 🎈

What’s new? Stability AI has unveiled Stable Audio 2.0, a significant leap in audio and music generation technology.

What does it do? Following its debut in September 2023, Stable Audio captured attention by enabling users to conjure short audio snippets from mere text prompts. Now, with the 2.0 iteration, users can delve deeper into creativity, fashioning full-fledged, three-minute audio tracks, double the length capacity of the previous version. This upgrade not only extends duration but also broadens capabilities, introducing audio-to-audio generation alongside text inputs. This new model was also trained exclusively on a licensed dataset from AudioSparx, which promises fair compensation for creators and honors opt-out requests.

“Since the initial release, we have dedicated ourselves to advancing its musicality, extending the output duration, and honing its ability to respond accurately to detailed prompts. These improvements are aimed at optimizing the technology for practical, real-world applications.”

IS THIS “AI-ASSISTED GENOCIDE” OR A HELPFUL TOOL? 💣

AI has been making waves in the military, and it has caused quite the ethical stir! 🌊

What’s happening? The Israeli military has been using an AI-powered database and targeting system known as “Lavender” to identify military targets for bombing in Gaza. This has alarmed human rights and technology experts, who said it could amount to “war crimes.”

What is Lavender? According to +972, “The Lavender system is designed to mark all suspected operatives in the military wings of Hamas and Palestinian Islamic Jihad (PIJ), including low-ranking ones, as potential bombing targets.” Reports have indicated that “the army [has] almost completely relied on Lavender, which clocked as many as 37,000 Palestinians as suspected militants—and their homes—for possible air strikes.” Al Jazeera’s Rory Challands, reporting from occupied East Jerusalem, confirmed this, stating, “That database is responsible for drawing up kill lists of as many as 37,000 targets.”

What’s the problem? Many people are concerned about the irresponsible deployment of this AI system. Unnamed Israeli intelligence officials who spoke to media outlets about the matter said Lavender had an error rate of about 10 percent, but was being used regardless. Marc Owen Jones, an assistant professor in Middle East Studies and digital humanities at Hamad Bin Khalifa University, says that the Israeli army is “deploying untested AI systems … to help make decisions about the life and death of civilians.” He continues, “Let’s be clear: This is an AI-assisted genocide, and going forward, there needs to be a call for a moratorium on the use of AI in the war.” The Israeli army has attempted to refute these allegations, noting that the technology is not a “system,” but instead “simply a database whose purpose is to cross-reference intelligence sources in order to produce up-to-date layers of information on the military operatives of terrorist organizations.” Regardless of which side of the story is true, one thing is for sure: this is just the beginning of ethical concerns surrounding AI in military applications.

FRY-AI FANATIC OF THE WEEK 🍟

Congrats to our subscriber, jfiddler! 🎉

Jfiddler gave us a “fresh and crispy” rating and commented, “I can’t live without this newsletter.”

(Leave a comment for us in any newsletter and you could be featured next week!)

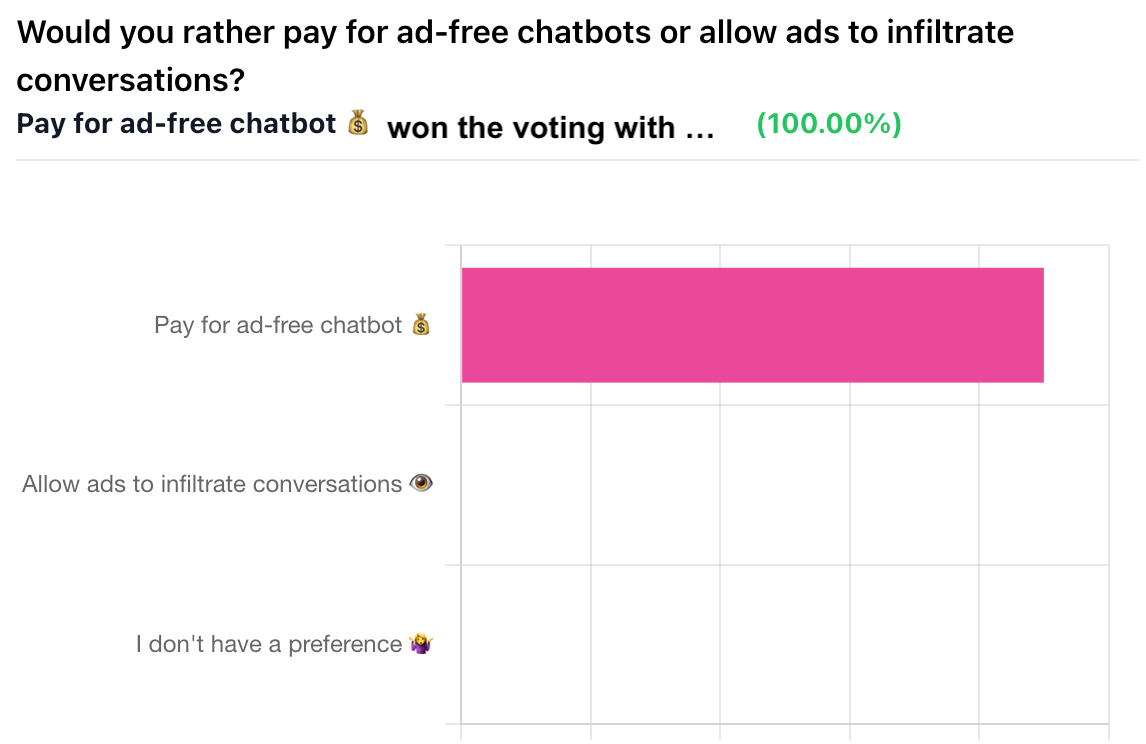

YESTERDAY’S POLL RESULTS 📊

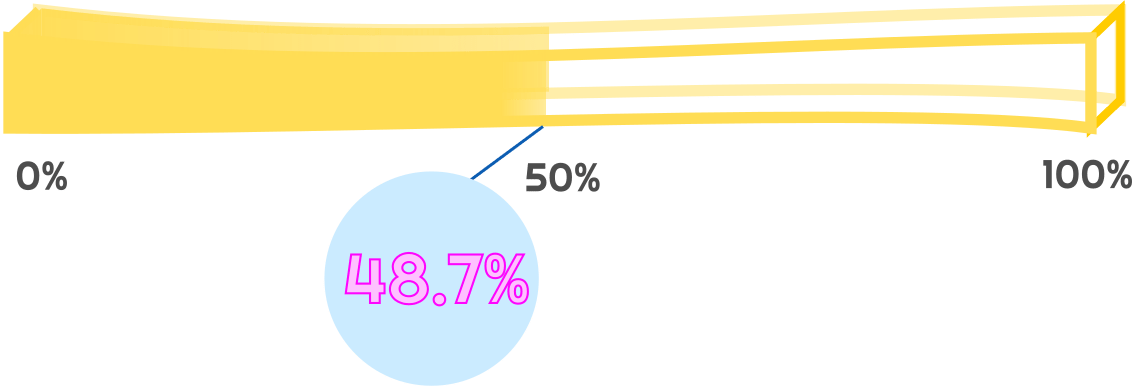

HAS AI REACHED SINGULARITY? CHECK OUT THE FRY METER BELOW

The Singularity Meter Rises 2.5%: AI is being used in war

(The Guardian)