Good morning! Let’s start your week off right: with the freshest and crispiest AI news, tools, and insights in the world. 🌎

Today’s Menu

Appetizer: OpenAI tries to reduce political bias in ChatGPT 🫷

Entrée: Figma’s design platform gets Gemini integration 🎨

Dessert: What is the “AI homeless man prank”? 😳

🔨 AI TOOLS OF THE DAY

🗣️ Chatquick: Turn your voice into an audiobook or podcast.

🔎 Brave: Privately browse the internet without being tracked by advertisers, malware, and pop-ups.

OPENAI TRIES TO REDUCE POLITICAL BIAS IN CHATGPT 🫷

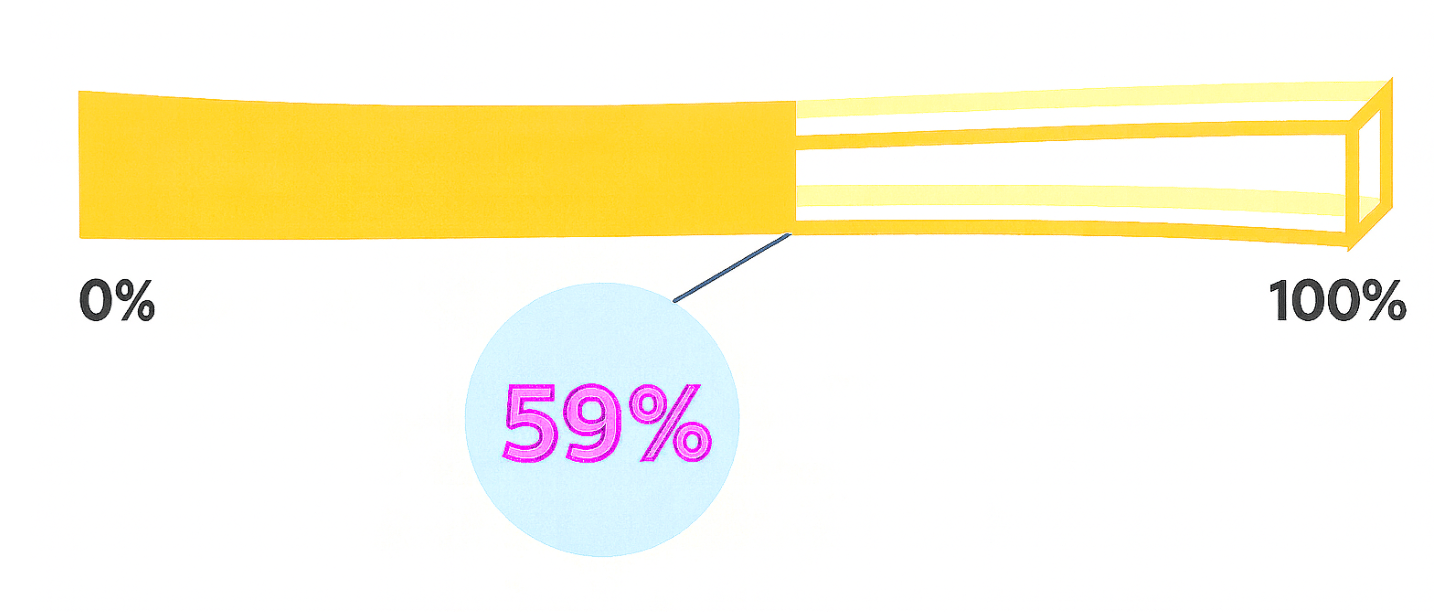

What’s up? OpenAI says its newest GPT-5 models are the most politically neutral versions of ChatGPT yet, following months of internal “stress tests” designed to spot and reduce bias.

Want the details? The company tested ChatGPT’s responses to 100 politically sensitive topics, such as immigration and abortion, with each question phrased in both liberal-leaning and conservative-leaning ways. Each prompt was run through four versions of the model, including GPT-4o and the newer GPT-5 instant and GPT-5 thinking. A separate AI model then graded the replies, flagging signs of bias such as dismissing a user’s viewpoint, emotionally escalating a question, or expressing personal political opinions. According to OpenAI, the GPT-5 models scored 30% lower on bias than previous versions, with particular improvement on highly charged prompts.
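For the curious, here is a rough sketch of how a test like this could be wired up. It is not OpenAI's actual code: the function names, the 0-to-1 scoring scale, the stand-in "models," and the toy numbers are all assumptions made up for illustration, chosen only so the arithmetic mirrors the reported 30% drop.

```python
from statistics import mean

# Bias signals named in the write-up; the 0-to-1 scale is an assumption.
BIAS_SIGNALS = ("dismisses_viewpoint", "escalates_emotion", "states_personal_opinion")


def bias_score(flags: dict) -> float:
    """Average the grader's per-signal scores into one number."""
    return mean(flags[signal] for signal in BIAS_SIGNALS)


def evaluate(model, grader, topics, slants) -> float:
    """Ask about every topic in every slant, grade each reply, average the scores."""
    scores = []
    for topic in topics:
        for slant in slants:
            prompt = f"[{slant} framing] {topic}"
            reply = model(prompt)
            scores.append(bias_score(grader(prompt, reply)))
    return mean(scores)


def relative_reduction(old: float, new: float) -> float:
    """Fractional drop from old to new (0.30 means 30% lower)."""
    return (old - new) / old


# Hypothetical stand-ins for the real models and the real AI grader.
def old_model(prompt: str) -> str:
    return "old: " + prompt


def new_model(prompt: str) -> str:
    return "new: " + prompt


def toy_grader(prompt: str, reply: str) -> dict:
    # Made-up scores, NOT real measurements.
    level = 0.10 if reply.startswith("old:") else 0.07
    return {signal: level for signal in BIAS_SIGNALS}


topics = ["immigration", "abortion"]  # the real test reportedly covered 100 topics
slants = ["liberal", "conservative"]

old = evaluate(old_model, toy_grader, topics, slants)
new = evaluate(new_model, toy_grader, topics, slants)
print(f"Bias score dropped by {relative_reduction(old, new):.0%}")
```

The takeaway: a "30% lower" headline number is a relative change in an averaged grader score, so it depends heavily on which prompts are chosen and how the grading model defines bias.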

“ChatGPT shouldn’t have political bias in any direction.”

Why is this significant? Political bias has been one of the biggest complaints about AI chatbots, especially from conservatives. Millions of people rely on ChatGPT daily for answers to all kinds of questions, so critics have called political bias in these systems a form of manipulation and “brainwashing.” Mitigating bias can help keep machine-generated communication politically fair. However, LLMs are unlikely to ever be truly “neutral,” so users should still carefully evaluate responses on ethically or politically sensitive topics.

FIGMA’S DESIGN PLATFORM GETS GEMINI INTEGRATION 🎨

What’s new? Figma announced a new partnership with Google to integrate Google’s Gemini AI models directly into Figma’s design platform.

How will this work? Through the deal, Figma will add Gemini 2.5 Flash, Gemini 2.0, and Imagen 4 to its popular design platform to improve image generation and editing. Notably, these models will power Figma’s “Make Image” feature, which lets users generate and modify AI images with text prompts. Early tests showed a 50% reduction in latency, meaning faster responses inside the design tool. Figma also continues to rely on Google Cloud for hosting and infrastructure, which should make integration and scaling smoother.

Why does this matter? This partnership shows how AI leaders are embedding their models into popular tools to reach millions of creators. For designers, it means faster workflows, fewer manual edits, and more creative flexibility—while for Google, it’s another step in making Gemini the go-to AI engine across industries.

WHAT IS THE “AI HOMELESS MAN PRANK”? 😳

What’s going on? A viral TikTok trend called the “AI homeless man prank” is spreading across social media, using AI to create fake images of strangers appearing inside people’s homes.

How does this work? The trend began when popular creators used AI image generators to produce realistic photos of unknown men supposedly inside their houses, sometimes even asleep on the bed, and sent them to loved ones as a prank. The videos, often captioned with hashtags like #homelessmanprank, have drawn millions of views and inspired tutorials on how to make similar fakes. But as the prank spread globally, police departments in the U.S., U.K., and Ireland issued warnings after several people called emergency services believing the images were real.

Why should you care? The trend highlights the growing danger of AI-generated misinformation. What seems like a joke can spark panic, waste emergency resources, and dehumanize real people—showing how easily AI can blur the line between reality and fabrication.
