It’s FRY-day, and that is our favorite day of the week! Why? Because it means more fresh and crispy AI news. 🍟

Today’s Menu

Appetizer: AI-driven bus leaves students stranded 🚌

Entrée: AI is headed to space 🪐

Dessert: New AI-generated news reporting guidelines 🗞️

🔨 AI TOOLS OF THE DAY

👩‍⚖️ AI Judge: Offers unbiased online verdicts to aid dispute resolution, leveraging AI for fair and just decisions. → check it out

🛩️ Inca.fm: Your interactive AI tour guide for every place. → check it out

💪 Zing Coach: AI-powered fitness tool that streamlines the process of evaluating user fitness levels. → check it out

AI-DRIVEN BUS LEAVES STUDENTS STRANDED 🚌

Q: How do you get Pikachu on a bus?

A: You poke-em-on. 😁

What happened? An AI-driven bus route, intended to offer seamless transportation for students in the largest school district in Louisville, Kentucky, experienced a defect yesterday. Buses failed to keep to the scheduled pick-ups and drop-offs, leaving children stranded.

What is the background? The district was facing a bus driver shortage and decided to spend nearly $200,000 to employ AlphaRoute, which utilizes AI to oversee its bus system. The system was a disaster, as it did not properly detect traffic patterns or account for additional stops and accurate stopping times. AlphaRoute apologized and stated, “We have been working alongside the district to fix as many issues as possible as fast as possible.”

What are people saying? The community is rightfully upset, and many bus drivers say the money could’ve been better used to hire new human bus drivers. Parents are the most frustrated, however. As one upset mother remarked, “Every parent deserves to have their child home from school before they have dinner.”

What does this mean? This problem is bigger than AlphaRoute. The bus failure highlights the importance of thorough testing and responsible development before deploying such AI-driven technology.

AI IS HEADED TO SPACE 🪐

Q: Why aren’t astronauts hungry when they get to space?

A: They had a big launch. 🚀

What’s new? New York-based startup Wallaroo Labs, also known as Wallaroo.ai, secured a $1.5 million contract from the U.S. Space Force through SpaceWERX to advance its machine learning models for edge computers in space. 🚀

What does machine learning in orbit look like? The Space Force seeks AI and machine learning capabilities for both cloud and edge deployment in space missions. Edge computing involves placing computing power closer to data sources like space sensors. Wallaroo.ai aims to showcase the use of machine learning models in this way by using radiation-tolerant commercial integrated circuits.

What’s the purpose? Vid Jain, CEO of Wallaroo.ai, said, “The ability to deploy, manage, and maintain ML models at the edge, on-orbit, and within the constraints of available hardware, limited compute, limited power, in the hostile environment that is space is critical to the development of the space industry.” Machine learning in orbit, he said, “promises to transform automation in space by leveraging AI for robotics, refueling, and protecting satellites from space debris.”

NEW AI-GENERATED NEWS REPORTING GUIDELINES 🗞️

New news by new AI could have new implications, which require new guidelines. 📰

What’s up? The Associated Press (AP), a renowned news organization, has released new guidelines for its journalists on the use of AI in news gathering. The AP has also entered into a collaboration with OpenAI, the creator of ChatGPT, allowing its news stories to train generative AI models.

What are the guidelines? Journalists within the AP are encouraged to experiment with tools like ChatGPT as valuable resources but are cautioned to verify AI-generated information by cross-checking it with reliable sources. The new guidelines also prohibit using AI to generate publishable content. In addition, the AP has asserted its commitment to maintaining the authenticity of visual and auditory content, refraining from AI-based alterations to media unless the AI-generated content itself is the subject of the news, in which case it must be properly labeled.

What is the purpose? In a landscape where news outlets increasingly deploy automated tools for content creation, AP's balanced approach underscores the need for responsible AI integration, maintaining journalistic ethics while harnessing the potential of AI advancements. As a prominent influencer in the journalism industry, AP's stance and guidelines for AI usage could set an example and shape the ongoing debate over the integration of AI into news production.

FRY-AI FANATIC OF THE WEEK 🍟

Congrats to our subscriber, cwhite3! 🎉

cwhite3 gave us a “fresh and crispy” and commented, “I appreciate all the work you guys do for us. I am always excited about new information regarding technology to enhance our lives. You guys rock!”

(Leave a comment for us in any newsletter and you could be featured next week!)

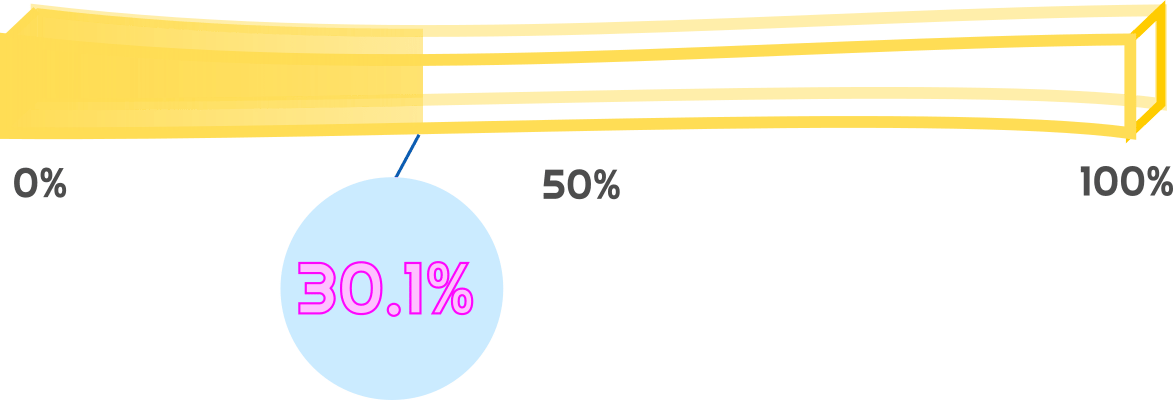

HAS AI REACHED SINGULARITY? CHECK OUT THE FRY METER BELOW

The Singularity Meter jumps above 30% for the first time ever. Snapchat’s “My AI” has a mind of its own.