Good morning, and welcome to a wonderful new week of AI updates. Okay, enough small talk—let’s dive in! 🦾

Check out this new FryAI original tool: XPressPost, which lets you generate engaging tweets (posts) from any URL in seconds! 🙌

🤯 MYSTERY AI LINK 🤯

(The mystery link can lead to ANYTHING AI-related: tools, memes, articles, videos, and more…)

Today’s Menu

Appetizer: ChatGPT shows 83% error on pediatric diagnoses 😳

Entrée: OpenAI to pay $1-5 million for news articles 📰

Dessert: NIST report says AI systems are vulnerable to cyber threats 🤖

🔨 AI TOOLS OF THE DAY

👍 Mine My Reviews: An AI review scraping tool that quickly analyzes your customers’ online reviews and testimonials. → check it out

👤 M1 Project: Create detailed Ideal Customer Profiles for your business in minutes. → check it out

🧙‍♂️ Respell: A no-code platform that allows you to explore and create AI-powered automations called “spells.” → check it out

CHATGPT SHOWS 83% ERROR ON PEDIATRIC DIAGNOSES 😳

An apple a day keeps the AI away. 🍎

What happened? According to a recent study published in JAMA Pediatrics, ChatGPT displayed an 83% error rate when diagnosing illnesses in children.

What was the study? Researchers at Cohen Children’s Medical Center in New York pitted ChatGPT-4 against 100 pediatric case challenges published between 2013 and 2023. The results highlight both the intricacy of pediatric cases and the work still needed before AI can be fully trusted in the medical field. In AI’s defense, pediatric diagnoses are notably challenging because young children have a limited ability to articulate their symptoms. Still, the findings underscore the indispensable role of human clinical experience in medical diagnostics and indicate that AI is not ready to replace pediatricians.

What now? Far from discouraging developers, this test pointed to ways of improving AI’s use in pediatric diagnoses. Potential improvements include training the AI on more accurate and reliable medical literature and giving it real-time access to medical data for continuous tuning and refinement. Despite AI’s current shortcomings, interest in integrating AI chatbots into clinical care remains high, presenting an opportunity for further research and development in the field.

OPENAI TO PAY $1-5 MILLION FOR NEWS ARTICLES 📰

I bought a newspaper and it immediately gave me bad news ... Needless to say, it was tear-ible! 😆

What’s going on? Amid allegations from the New York Times of unlicensed training, OpenAI is reportedly offering between $1 million and $5 million annually to license copyrighted news articles for training its AI models.

Who else is after the news? Apple is reportedly offering at least $50 million over a multiyear period to media companies for AI training data. Meta is allegedly offering $3 million yearly for news stories. Google, in 2020, pledged $1 billion to partner with news organizations and recently agreed to pay Canadian publishers $100 million annually.

What is the significance? There are two takeaways from this trend. First, a battle is underway to secure legal access to material for training AI models. Second, the trend highlights a deeper concern about copyright and the personhood of AI. If humans are able to learn from publicly available, copyrighted sources, shouldn’t an AI be able to do the same? Questions like this will continue to arise as we watch these deals unfold.

NIST REPORT SAYS AI SYSTEMS ARE VULNERABLE TO CYBER THREATS 🤖

Q: Why couldn’t the police arrest the hacker?

A: He ransomware. 🏃‍♂️

What’s new? The U.S. Department of Commerce’s National Institute of Standards and Technology (NIST) recently released a report on “Trustworthy and Responsible AI,” detailing the vulnerabilities of AI systems to cyber threats.

What does the report say? The report identifies four types of cyberattacks (poisoning, abuse, privacy, and evasion attacks), each posing unique risks to AI systems. From manipulating training data to injecting false information into sources, the report outlines how adversaries can compromise AI integrity.

“Despite the significant progress AI and machine learning have made, these technologies are vulnerable to attacks that can cause spectacular failures with dire consequences.”

What does this mean? As AI permeates our connected society, the NIST report underscores the urgency of addressing these vulnerabilities. The difficulty of making an AI model unlearn malicious behaviors amplifies the need for robust cybersecurity practices. While foolproof methods remain elusive, the report calls for more responsible, secure development processes.

MEME MONDAY 🤣

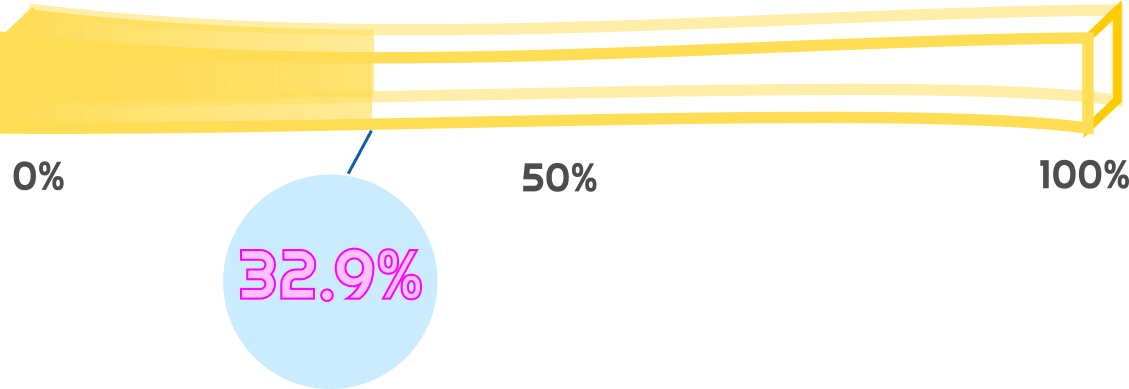

HAS AI REACHED SINGULARITY? CHECK OUT THE FRY METER BELOW

The Singularity Meter jumps 0.5%: Microsoft to implement an “AI” key on future PC keyboards